Multimodal Fusion for NextG V2X Communications

Overview:

Vehicle-to-everything (V2X) communication spans a variety of applications such as collision avoidance safety systems, traffic signal timing, safety alerts to pedestrians, and real-time traffic information. Each of these applications requires either low latency or a high data rate, for safety or quality-of-service reasons. This motivates using high-band mmWave frequencies for V2X to ensure near real-time feedback and Gbps data rates. However, because transmission in the mmWave band is directional, the initial beam alignment step carries a high overhead. We propose using side information from sensor inputs such as GPS, camera, and LiDAR to assist the beam initialization. This webpage highlights the key observations of our research, with links to further resources. We also publish several datasets to the research community to pave the way for this new approach.

Download our datasets:

Please use the below links to download the datasets. These datasets were used for the papers mentioned in the "Venue" column. The GitHub repositories are also available for independent validation. Any use of these datasets or GitHub repositories, which results in an academic publication or other publication that includes a bibliography, should contain a citation to our papers.

| Paper | Venue | Dataset | Raw Data | Processed Data | Portable Data [*.HDF5] | GitHub | Description |
|---|---|---|---|---|---|---|---|
| 1 | IEEE Transactions on Vehicular Technology | Raymobtime and NEU Dataset | Link | Link | -- | -- | B. Salehi, G. Reus-Muns, D. Roy, Z. Wang, T. Jian, J. Dy, S. Ioannidis, and K. Chowdhury, Deep Learning on Multimodal Sensor Data at the Wireless Edge for Vehicular Network, IEEE Transactions on Vehicular Technology. |
| 2 | Infocom 2022 | FLASH | Link | Link | -- | Link | B. Salehi, J. Gu, D. Roy, and K. Chowdhury, FLASH: Federated Learning for Automated Selection of High-band mmWave Sectors, in IEEE International Conference on Computer Communications (INFOCOM), May 2022, [Accepted]. |
| 3 | MSN 2021 | Raymobtime | -- | -- | -- | -- | G. Reus-Muns, B. Salehi, D. Roy, T. Jian, Z. Wang, J. Dy, S. Ioannidis, and K. Chowdhury, “Deep Learning on Visual and Location Data for V2I mmWave Beamforming,” in IEEE International Conference on Mobility, Sensing and Networking, Dec 2021. |
| 4 | -- | e-FLASH | Link | Link | Upcoming | -- | J. Gu, B. Salehi, D. Roy, and K. Chowdhury, Multimodality in 5G MIMO Beam Selection using Deep Learning: Datasets and Challenges. [Under Review] |

Deep Learning on Multimodal Sensor Data at the Wireless Edge for Vehicular Network:

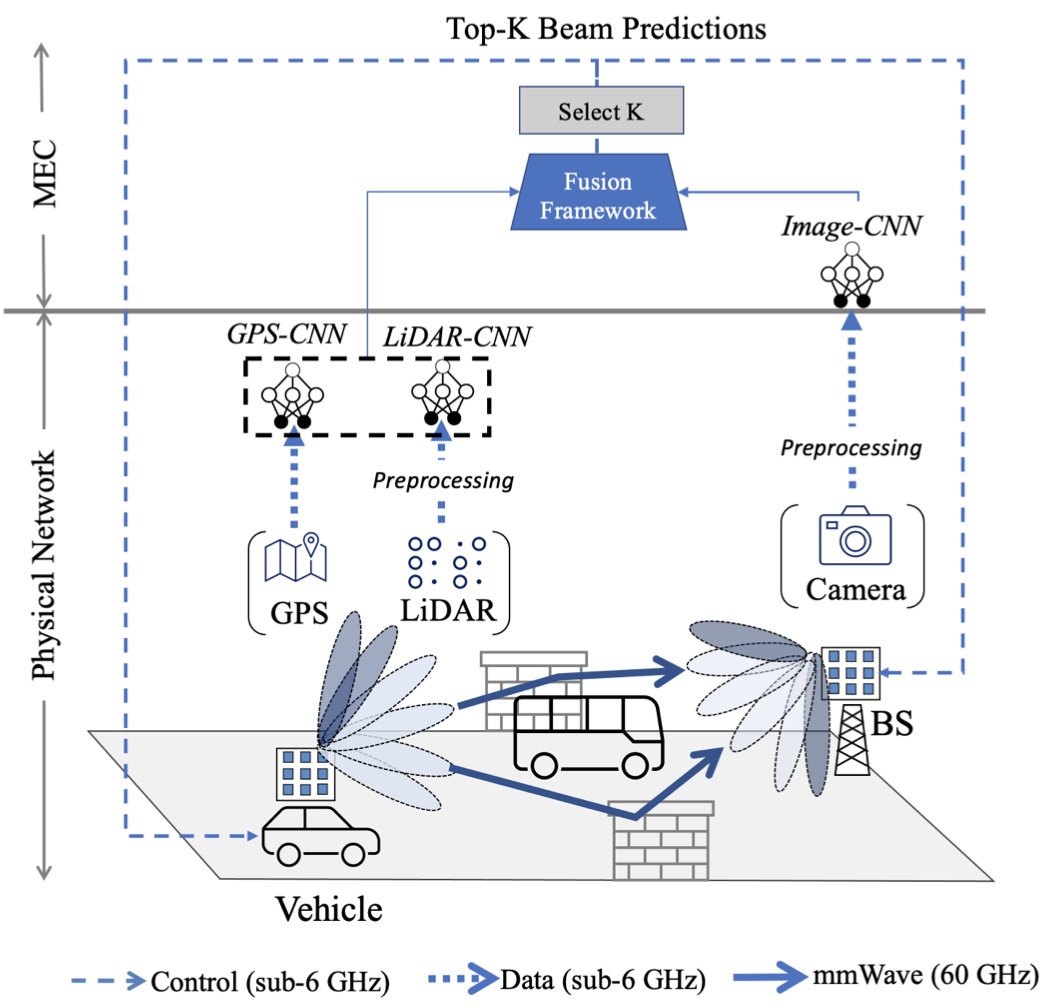

Fig 2: Our fusion pipeline exploits GPS, camera and LiDAR sensor data to restrict the beam selection to top-K beam pairs.

Keywords: mmWave, beam selection, multimodal data, fusion, distributed inference, 5G.

Abstract: Beam selection for millimeter-wave links in a vehicular scenario is a challenging problem, as an exhaustive search among all candidate beam pairs cannot be assuredly completed within short contact times. We solve this problem by expediting beam selection using multimodal data collected from sensors such as LiDAR, camera, and GPS. We propose individual modality and distributed fusion-based deep learning (F-DL) architectures that can execute locally as well as at a mobile edge computing center (MEC), with a study on associated tradeoffs. We also formulate and solve an optimization problem that considers practical beam-searching, MEC processing and sensor-to-MEC data delivery latency overheads for determining the output dimensions of the above F-DL architectures. Results from extensive evaluations conducted on publicly available synthetic and home-grown real-world datasets reveal 95% and 96% improvement in beam selection speed over classical RF-only beam sweeping, respectively. F-DL also outperforms the state-of-the-art techniques by 20-22% in predicting top-10 best beam pairs.
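To make the top-K idea concrete, the following is a minimal sketch, not the paper's implementation. It assumes a model has already produced one score per candidate beam pair, and it shows how the sweep can be restricted to the K highest-scoring pairs and how a top-K accuracy figure can be computed. The function names `top_k_indices` and `top_k_accuracy` and the toy scores are hypothetical.

```python
from typing import List, Sequence


def top_k_indices(scores: Sequence[float], k: int) -> List[int]:
    """Return the indices of the k highest scores, best first."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return order[:k]


def top_k_accuracy(all_scores: Sequence[Sequence[float]],
                   true_best: Sequence[int],
                   k: int) -> float:
    """Fraction of samples whose true best beam pair is among the top-k predictions."""
    if len(all_scores) != len(true_best) or not true_best:
        raise ValueError("need one ground-truth index per non-empty score list")
    hits = sum(
        1
        for scores, best in zip(all_scores, true_best)
        if best in top_k_indices(scores, k)
    )
    return hits / len(true_best)


# Toy example (made-up scores): two samples, three candidate beam pairs each.
example_scores = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2]]
example_truth = [2, 0]
candidates_for_first_sample = top_k_indices(example_scores[0], k=2)
accuracy_at_2 = top_k_accuracy(example_scores, example_truth, k=2)
```

With only the K returned pairs swept over the air, the radio measures K candidates instead of every pair in the codebook, which is where the reported speed-up comes from.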

NEU Dataset:

The aforementioned paper is validated on the synthetic Raymobtime and real-world NEU datasets. The NEU dataset includes multi-modal data and RF ground truth collected by the sensors below.

Sensor Modalities:

- mmWave radio: We use TP-Link Talon AD7200 tri-band routers with Qualcomm QCA9500 IEEE 802.11ad Wi-Fi chips as both the BS and Rx at the 60 GHz frequency. The default codebook includes sector IDs from 1 to 31 and 61 to 63, for a total of 34 sectors; the sectors with IDs of 32 to 60 are undefined. We gain access to PHY-layer characteristics of the AP and Rx using the open-source Linux Embedded Development Environment (LEDE) and Nexmon firmware patching released by Steinmetzer et al. We record the time-synchronized RF ground truth data as data transmission rate and received signal strength indication (RSSI) at each sector.

- GPS: The MyLincoln Touch on-board GPS provides coordinate readings at a sampling rate of 1 Hz.

- LiDAR: Velodyne (VLP 16) LiDAR with a field of view (FOV) of 360 degrees records point clouds at a sampling rate of 10 Hz.
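Because the default codebook skips sector IDs 32 to 60, the raw IDs are not contiguous, which is inconvenient for a classifier with one output per sector. The sketch below shows one way to map the 34 defined IDs to class indices 0 to 33 and back. The names `VALID_SECTOR_IDS`, `SECTOR_TO_CLASS`, `CLASS_TO_SECTOR`, and `best_sector`, as well as the dictionary layout of the RSSI readings, are assumptions for illustration and are not taken from the dataset files.

```python
from typing import Dict

# Sector IDs defined in the default codebook: 1..31 and 61..63 (34 in total).
VALID_SECTOR_IDS = list(range(1, 32)) + list(range(61, 64))

# Contiguous class index for each defined sector ID, and the inverse mapping.
SECTOR_TO_CLASS: Dict[int, int] = {sid: idx for idx, sid in enumerate(VALID_SECTOR_IDS)}
CLASS_TO_SECTOR: Dict[int, int] = {idx: sid for sid, idx in SECTOR_TO_CLASS.items()}


def best_sector(rssi_by_sector: Dict[int, float]) -> int:
    """Return the defined sector ID with the highest RSSI.

    Readings reported for undefined IDs (32..60) are ignored.
    """
    valid = {sid: rssi for sid, rssi in rssi_by_sector.items() if sid in SECTOR_TO_CLASS}
    if not valid:
        raise ValueError("no RSSI reading for any defined sector")
    return max(valid, key=valid.get)


# Hypothetical readings: sector 40 is undefined and therefore skipped.
label = SECTOR_TO_CLASS[best_sector({5: -60.0, 40: -10.0, 62: -55.0})]
```

Here `label` is the class index a model would be trained to predict, and `CLASS_TO_SECTOR` converts a prediction back to the sector ID used by the radio.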

Dataset Description:

We define four different categories as (a) LOS passing, (b) NLOS with a pedestrian in front of the BS, (c) NLOS with a static car in front of the BS, and (d) NLOS with a car moving between the Rx and the BS with additional variations as shown in the table below.

| Category | Speed (mph) | Scenarios | # of samples |
|---|---|---|---|
| LOS passing | 10 | -- | 1568 |
| NLOS by pedestrian | 15 | standing, walk right to left, walk left to right | 4791 |
| NLOS by static car | 15 | in front | 1506 |
| NLOS by moving car | 20 | 15mph same lane, 15mph opposite lane | 2988 |

FLASH: Federated Learning for Automated Selection of High-band mmWave Sectors

Fig 3: The schematic of proposed FLASH framework for mmWave vehicular networks, where each vehicle is equipped with GPS, LiDAR and camera sensors.

Keywords: sector selection, mmWave, multimodal data, federated learning, non-RF data, fusion.

Abstract: Fast sector-steering in the mmWave band for vehicular mobility scenarios remains an open challenge. This is because standard-defined exhaustive search over predefined antenna sectors cannot be assuredly completed within short contact times. This paper proposes machine learning to speed up sector selection using data from multiple non-RF sensors, such as LiDAR, GPS, and camera images. The contributions in this paper are threefold: First, a multimodal deep learning architecture is proposed that fuses the inputs from these data sources and locally predicts the sectors for best alignment at a vehicle. Second, it studies the impact of missing data (e.g., missing LiDAR/images) during inference, which is possible due to unreliable control channels or hardware malfunction. Third, it describes the first-of-its-kind multimodal federated learning framework that combines model weights from multiple vehicles and then disseminates the final fusion architecture back to them, thus incorporating private sharing of information and reducing their individual training times. We validate the proposed architectures on a live dataset collected from an autonomous car equipped with multiple sensors (GPS, LiDAR, and camera) and roof-mounted Talon AD7200 60 GHz mmWave radios. We observe a 52.75% decrease in sector selection time compared to the 802.11ad standard while maintaining 89.32% of the throughput of the globally optimal solution.
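The abstract states that model weights from multiple vehicles are combined and sent back, but it does not give the aggregation rule. As an illustration only, the sketch below uses sample-weighted averaging in the style of FedAvg, which is an assumption and may differ from what FLASH actually does. The function `federated_average` and the representation of a model as a list of flat parameter lists are hypothetical.

```python
from typing import List, Sequence

Weights = List[List[float]]  # one flat list of floats per model layer


def federated_average(client_weights: Sequence[Weights],
                      client_sizes: Sequence[int]) -> Weights:
    """Average per-vehicle weights, weighting each vehicle by its local sample count.

    Assumes every vehicle trains the same architecture, so all weight lists
    have identical shapes.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")

    averaged: Weights = []
    for layer in range(len(client_weights[0])):
        layer_len = len(client_weights[0][layer])
        averaged.append([
            sum(w[layer][i] * n for w, n in zip(client_weights, client_sizes)) / total
            for i in range(layer_len)
        ])
    return averaged


# Two vehicles with a one-layer toy model; the second holds three times more data.
global_weights = federated_average(
    client_weights=[[[1.0, 2.0]], [[3.0, 4.0]]],
    client_sizes=[1, 3],
)
```

In a full round, the server would broadcast `global_weights` to every vehicle, each vehicle would continue training on its own sensor data, and the process would repeat. Only weights leave the vehicle, not raw LiDAR, GPS, or image data.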

FLASH Dataset:

The aforementioned paper is validated on the real-world FLASH dataset. The FLASH dataset includes multi-modal data and RF ground truth collected by the sensors below.

Sensor Modalities:

- mmWave radio: We use TP-Link Talon AD7200 tri-band routers with Qualcomm QCA9500 IEEE 802.11ad Wi-Fi chips as both the BS and Rx at the 60 GHz frequency. The default codebook includes sector IDs from 1 to 31 and 61 to 63, for a total of 34 sectors; the sectors with IDs of 32 to 60 are undefined. We gain access to PHY-layer characteristics of the AP and Rx using the open-source Linux Embedded Development Environment (LEDE) and Nexmon firmware patching released by Steinmetzer et al. We record the time-synchronized RF ground truth data as data transmission rate and received signal strength indication (RSSI) at each sector.

- GPS: The MyLincoln Touch on-board GPS provides coordinate readings at a sampling rate of 1 Hz.

- Camera: Camera sensor (GoPro Hero4) with a field of view (FOV) of 130 degrees provides video readings at a sampling rate of 30 fps.

- LiDAR: Velodyne (VLP 16) LiDAR with a FOV of 360 degrees records point clouds at a sampling rate of 10 Hz.

FLASH testbed:

First, appropriate IP addresses are assigned to each router. Then, the open-source iPerf3 tool is used to set the Tx-Rx pair as "client" and "server", respectively, and to generate the stream to be transmitted. We use patched LEDE firmware to record the time-synchronized RF ground truth. The GPS and LiDAR sensors are connected to the onboard computer in the vehicle using low-latency Ethernet cables and are assigned unique IP addresses. The computer runs the Robot Operating System (ROS) suite, which reads measurements instantaneously and writes them into ROS bag files. The GoPro cameras are connected over Wi-Fi (Hero4) and a USB cable (Hero9) for simultaneous operation. We use custom Python code employing OpenCV to control the camera recordings and label them with the appropriate timestamps. Note that all sensors are connected to the same computer to ensure that the same clock reference is used for time stamping.
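Since the sensors sample at different rates (1 Hz GPS, 10 Hz LiDAR, 30 fps video) but share one clock, readings can be paired by timestamp after recording. The sketch below shows nearest-timestamp matching as one possible approach; it is not the project's released preprocessing code. The function names `nearest_index` and `align_to_reference`, the `max_gap` tolerance, and the sample timestamps are assumptions.

```python
from bisect import bisect_left
from typing import List, Optional, Sequence


def nearest_index(sorted_ts: Sequence[float], t: float) -> int:
    """Index of the timestamp in sorted_ts closest to t (ties go to the earlier one)."""
    pos = bisect_left(sorted_ts, t)
    if pos == 0:
        return 0
    if pos == len(sorted_ts):
        return len(sorted_ts) - 1
    before, after = sorted_ts[pos - 1], sorted_ts[pos]
    return pos if (after - t) < (t - before) else pos - 1


def align_to_reference(reference_ts: Sequence[float],
                       other_ts: Sequence[float],
                       max_gap: float) -> List[Optional[int]]:
    """For each reference timestamp, find the closest reading of another sensor.

    Returns None where the closest reading is further than max_gap seconds away,
    for example when a sensor dropped samples.
    """
    if not other_ts:
        return [None] * len(reference_ts)
    matches: List[Optional[int]] = []
    for t in reference_ts:
        j = nearest_index(other_ts, t)
        matches.append(j if abs(other_ts[j] - t) <= max_gap else None)
    return matches


# Made-up timestamps in seconds: GPS as the reference, LiDAR with a gap near t = 2 s.
gps_ts = [0.0, 1.0, 2.0]
lidar_ts = [0.02, 0.12, 0.98, 1.08, 2.5]
pairs = align_to_reference(gps_ts, lidar_ts, max_gap=0.1)
```

Using the slowest sensor as the reference yields one synchronized sample per reference reading; a faster reference would produce more samples but reuse the same slow-sensor reading several times.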

Dataset Description:

We define four different categories as (a) LOS passing, (b) NLOS with a pedestrian in front of the BS, (c) NLOS with a static car in front of the BS, and (d) NLOS with a car moving between the Rx and the BS with additional variations as shown in the table below.

| Category | Speed (mph) | Lane | Featuring | Scenarios | # of episodes | # of samples |
|---|---|---|---|---|---|---|
| 1 | 10, 15, 20 | same, opposite | -- | -- | 60 | 9729 |
| 2 | 15 | opposite | pedestrian | standing, walk right to left, walk left to right, walk back to front, walk front to back | 50 | 7968 |
| 3 | 15, 20 | opposite | static car | on right, on left, in front | 60 | 8174 |
| 4 | 15, 20 | opposite | moving car | 10mph same lane, 20mph same lane, 10mph opposite lane, 20mph opposite lane | 40 | 6052 |

Deep Learning on Visual and Location Data for V2I mmWave Beamforming:

Fig 5: Visual and location data are combined using data fusion techniques. Different neural networks are used to process each data modality, and are ultimately combined with the fusion neural network to (i) aid the beam selection and (ii) detect the blockage.

Keywords: mmWave, beam selection, machine learning, deep learning, fusion, multi-modal data, 5G.

Abstract: Accurate beam alignment in the millimeter-wave (mmWave) band introduces considerable overheads involving brute-force exploration of multiple beam-pair combinations and beam retraining due to mobility. This cost often becomes intractable under high mobility scenarios, where fast beamforming algorithms that can quickly adapt the beam configurations are still under development for 5G and beyond. Besides, blockage prediction is a key capability for establishing reliable mmWave links. In this paper, we propose a data fusion approach that takes inputs from visual edge devices and localization sensors to (i) reduce the beam selection overhead by narrowing down the search to a small set containing the best possible beam-pairs and (ii) detect blockage conditions between transmitters and receivers. We evaluate our approach through joint simulation of multi-modal data from vision and localization sensors and RF data. Additionally, we show how deep learning based fusion of images and Global Positioning System (GPS) data can play a key role in configuring vehicle-to-infrastructure (V2I) mmWave links. We show a 90% top-10 beam selection accuracy and a 92.86% blockage prediction accuracy. Furthermore, the proposed approach achieves a 99.7% reduction in the beam selection time while keeping 94.86% of the maximum achievable throughput.
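The abstract reports the share of the maximum achievable throughput that is retained, without spelling out the formula. One plausible reading, stated here as an assumption rather than the paper's definition, is the average over samples of the best throughput inside the predicted candidate set divided by the best throughput over all beam pairs. The function `throughput_ratio` and the toy numbers below are hypothetical.

```python
from typing import Sequence


def throughput_ratio(throughputs: Sequence[Sequence[float]],
                     candidate_sets: Sequence[Sequence[int]]) -> float:
    """Mean ratio of best-in-candidate-set throughput to best overall throughput.

    throughputs[s][b] is the measured throughput of beam pair b in sample s;
    candidate_sets[s] holds the beam-pair indices the model shortlisted for s.
    Samples whose best overall throughput is not positive are skipped.
    """
    ratios = []
    for per_beam, candidates in zip(throughputs, candidate_sets):
        best_overall = max(per_beam)
        if best_overall <= 0:
            continue  # no usable link in this sample, so the ratio is undefined
        best_in_set = max(per_beam[i] for i in candidates)
        ratios.append(best_in_set / best_overall)
    if not ratios:
        raise ValueError("no sample with positive throughput")
    return sum(ratios) / len(ratios)


# Made-up throughputs (arbitrary units) for two samples and three beam pairs.
ratio = throughput_ratio(
    throughputs=[[100.0, 200.0, 400.0], [300.0, 150.0, 50.0]],
    candidate_sets=[[0, 1], [0, 2]],
)
```

A ratio of 1.0 would mean the shortlisted set always contains the globally best beam pair; lower values quantify the throughput given up in exchange for a shorter search.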

Multimodality in 5G MIMO Beam Selection using Deep Learning: Datasets and Challenges

Fig 6: Use cases and overview of real-world FLASH, e-FLASH, and synthetic Raymobtime datasets.

Keywords: Multimodal Data, 5G, MIMO, Non-RF Data.

Abstract: The increasing availability of multimodal data holds many promises for developments in 5G-and-beyond multiple antenna systems by harnessing the potential for enhanced situational awareness. Specifically, inclusion of non-RF modalities to complement RF-only data in communications-related decisions like beamforming may speed up decision making in situations where an exhaustive search spanning all candidate options is required by the standard. However, to accelerate research in this topic, there is a need to collect datasets in a principled manner and then represent them with standard metadata fields. This paper presents an experimentally-obtained dataset, composed of 23 GB of data collected over 3 days in open-street scenarios in downtown Boston, that aids in beamforming in vehicle-to-everything (V2X) millimeter wave (mmWave) bands, with the goal of facilitating machine learning (ML) in wireless communication required for autonomous driving. Beyond a specific example, this paper describes methodologies of creating such datasets that use time-synchronized and heterogeneous types of LiDAR, GPS, and camera image data, paired with the RF ground truth data of selected beams in the mmWave band. While we use beamforming as the primary demonstrator, we also discuss how multimodal datasets may be used in ML-based PHY-layer optimization research including localization and handover.

e-FLASH Dataset:

The aforementioned paper is validated on the real-world e-FLASH dataset. This dataset is an extension of the FLASH dataset with two extra sensors.

Sensor Modalities:

- mmWave radio: We use TP-Link Talon AD7200 tri-band routers with Qualcomm QCA9500 IEEE 802.11ad Wi-Fi chips as both the BS and Rx at the 60 GHz frequency. The default codebook includes sector IDs from 1 to 31 and 61 to 63, for a total of 34 sectors; the sectors with IDs of 32 to 60 are undefined. We gain access to PHY-layer characteristics of the AP and Rx using the open-source Linux Embedded Development Environment (LEDE) and Nexmon firmware patching released by Steinmetzer et al. We record the time-synchronized RF ground truth data as data transmission rate and received signal strength indication (RSSI) at each sector.

- GPS: The MyLincoln Touch on-board GPS provides coordinate readings at a sampling rate of 1 Hz.

- Hero 4 Camera: Camera sensor (GoPro Hero4) with a field of view (FOV) of 130 degrees provides video readings at a sampling rate of 30 fps, mounted towards the BS (side-facing).

- Hero 9 Camera: Camera sensor (GoPro Hero9) with a FOV of 130 degrees provides video readings at a sampling rate of 30 fps, mounted towards the vehicle (front-facing).

- Velodyne LiDAR: Velodyne (VLP 16) LiDAR with a FOV of 360 degrees and 16 channels records point clouds at a sampling rate of 10 Hz.

- Ouster LiDAR: Ouster (OS1-64) LiDAR with a FOV of 360 degrees and 64 channels records point clouds at a sampling rate of 10 Hz.

Dataset Description:

We define four different categories as (a) LOS passing, (b) NLOS with a pedestrian in front of the BS, (c) NLOS with a static car in front of the BS, and (d) NLOS with a car moving between the Rx and the BS with additional variations as shown in the table below.

| Category | Speed (mph) | Lane | Featuring | Scenarios | # of episodes | # of samples |
|---|---|---|---|---|---|---|
| 1 | 10 | same | -- | -- | 10 | 1568 |
| 2 | 15 | opposite | pedestrian | standing, walk right to left, walk left to right | 30 | 4791 |
| 3 | 10 | opposite | static car | in front | 10 | 1506 |
| 4 | 15, 20 | opposite | moving car | 15mph same lane, 20mph opposite lane | 40 | 2988 |

Team:

Advisors:

Students: